This is a long and in-depth guide. The first 4 steps walk you through setting up your Google Cloud account for this project, the final 8 steps revolve around using the StreamEA tool, extracting data from the NLP API, building spreadsheets to view the data, and extrapolating meaning from your data.

If you know how to use Google Cloud services and just want the entity/keyword discovery for SEO bits, JUMP THERE NOW.

Table of Contents

- Step 1: Setup a Google Cloud Account

- Step 2: Create a New Cloud Project

- Step 3: Create a New Service Account in Your Project

- Step 4: Create and Download your JSON Key

- Step 5: Find Competitor Pages Outranking Yours

- Step 6: Upload Your Google Cloud JSON Key

- Step 7: Compare Your Page With The First Competitor Page

- Step 8: Fix the “PermissionDenied: 403 Cloud Natural Language API has not been used in project” Error

- Step 9: Compare Your Page With The First Competitor Page (for real this time)

- Step 10: Pull Data and Place in Spreadsheets

- Step 11: Build 2 More Types of Spreadsheets for Analysis

- Step 12: Practical Applications of NLP Data

- Frequently Asked Questions

- Acknowledgements

Introduction and Background

Charly Wargnier (@DataChaz on Twitter) posted about a free tool he created called StreamEA. It leverages Google’s Cloud Natural Language Processing API to examine top ranking competitor pages and discover keywords, in the form of entities, that you may not be using on your pages but probably should. I dove in immediately to test it out and see how his tool worked. We had already been building an NLP process internally, and the tool created by Charly helped immensely in making our process more efficient.

One #SEO tip that my entity analysis app StreamEA is rather good at:

– Find the Top 3 ranking pages for your keyword

– Leverage the Google #NLP API to get their entities

– Prioritise the ones w/ higher salience

– Use them in your on-page content ! 🙌👉 https://t.co/9PSacBSXOU https://t.co/PoNVnBLv5R

— Charly Wargnier (@DataChaz) April 18, 2021

A few things you will need to make this work:

- An Active Google Cloud Platform account

- A new project in Google Cloud

- A JSON Key for your project in Google Cloud

- A list of competitor pages to examine

- Spreadsheet software (Excel or Google Drive) to store your data

This article will walk you through the setup but not all of the technical intricacies of Charly’s system or Natural Language Processing.

Here’s how to use Google’s Natural Language Processing API to help your SEO out for free (mostly)

Step 1: Setup a Google Cloud Account

If you have not already done so, you will need a Google Cloud account to use the NLP API and the tool built by Charly. You can get a free $300 credit but it is limited to 90 days of usage after which you will be charged by Google for usage.

You don’t just need access to Google Cloud; you need it to be active. This means you have to set up billing and activate your free trial. I honestly would rather give Google as little money as possible, so hopefully another solution comes up soon. However, for now StreamEA uses only the Google NLP API. Google Cloud Pricing is all over the place, but right now for the “Cloud Natural Language API” you only have to pay after processing 5,000 units through their system. Read about the NLP API Pricing from Google Cloud here.

You can access Google Cloud here: https://console.cloud.google.com/

Step 2: Create a New Cloud Project

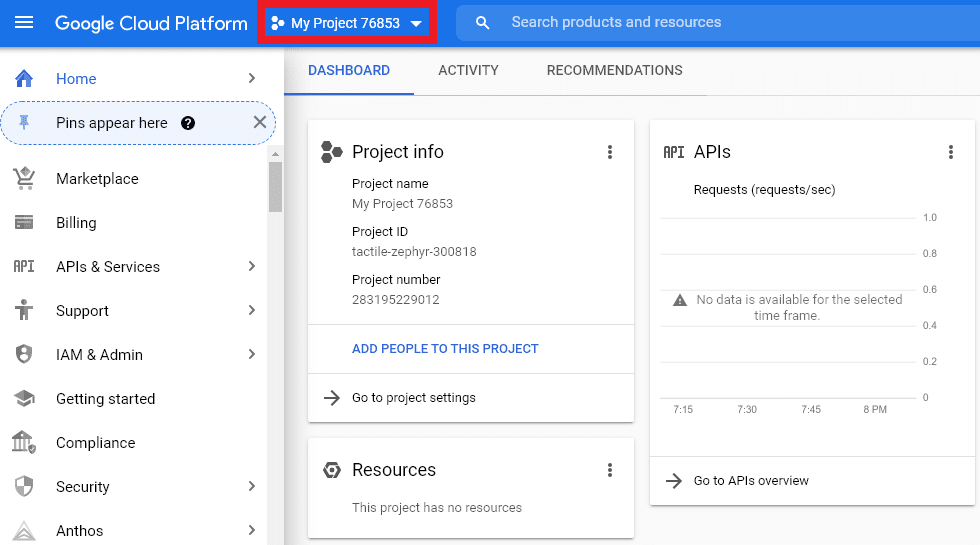

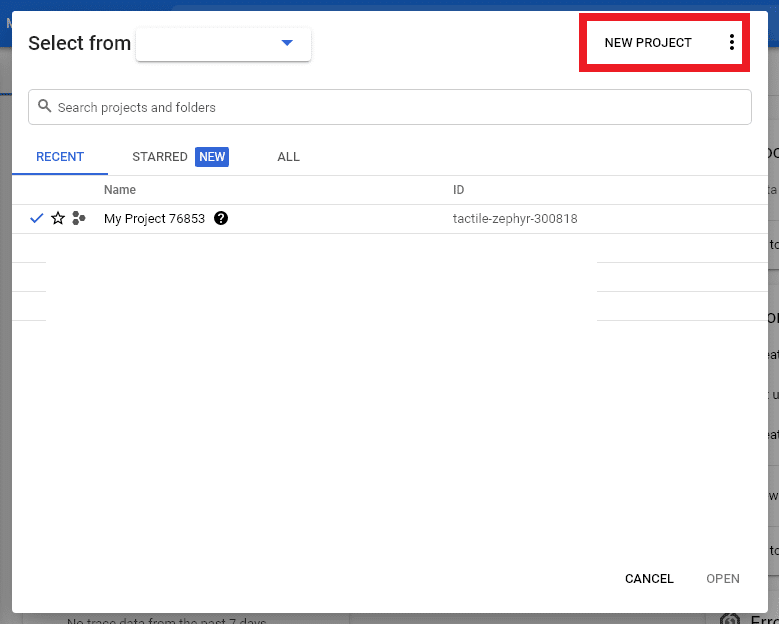

Now that you have your account activated and working, you will need to create a new project to use for this tool. Google Cloud may have created a new project for you on setup. If so, you will see it called something like “My Project” directly to the right of the Cloud logo. Click this name or the downward arrow next to it to bring up your Projects menu.

This should bring up a menu that displays all of your projects and allows you to start a new project.

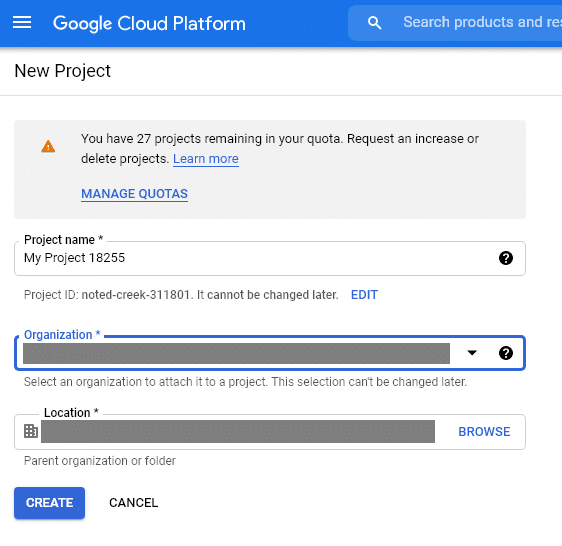

You should see a screen like this that warns you about your quota limit, allows you to give the project a name, and allows you to select the organization for the project as well as the location. One thing you may not notice here is that you can customize the project id even though Google creates one for you. We are going to call ours “My Test NLP Project” for this article.

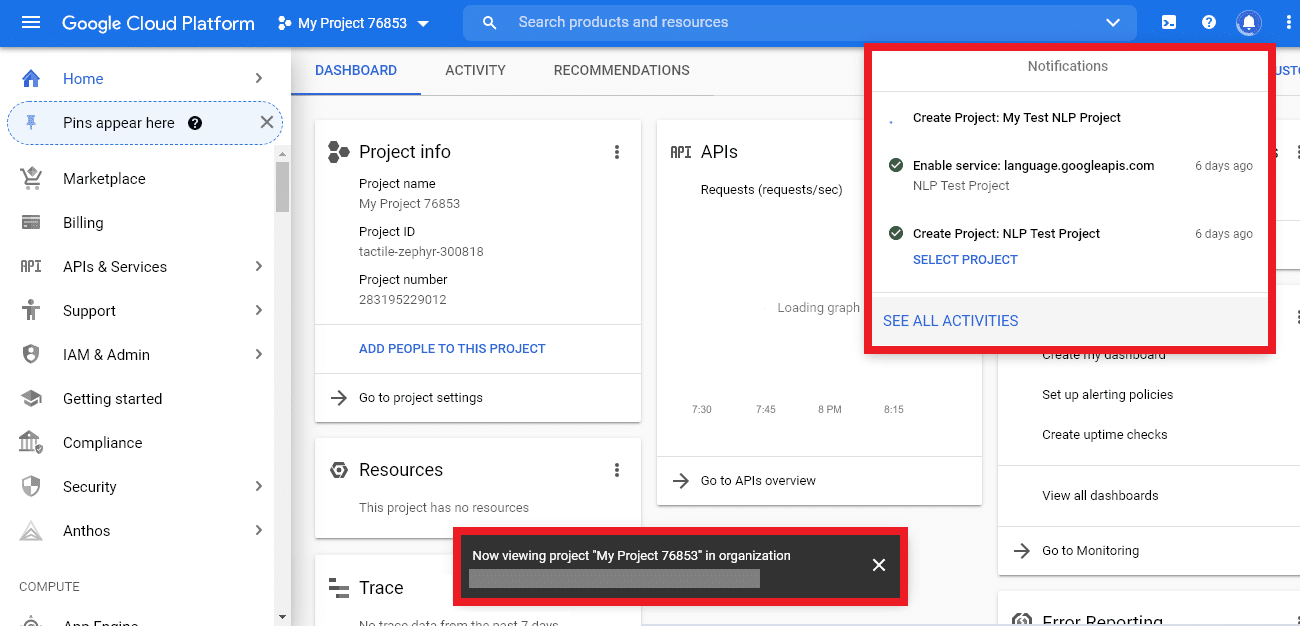

Once you click the “Create” button you will be taken to a Project dashboard. Notification messages will begin popping up in the right-hand corner and a notice will appear at the bottom of your screen. These messages tell you that you have just created a new project (right-hand corner) and in what organization (bottom). As you can see in the below graphic, the notifications also display the past two actions taken in your account. You may notice that you are not taken to the newly created project, but to the last project you viewed instead.

Step 3: Create a New Service Account in Your Project

To use the StreamEA tool you will need to get JSON credentials for a Google Cloud Project.

The StreamEA tool gives you the instruction “From the Service account list, select ‘New service account’”. Unfortunately, I found this instruction of little use, as there was nothing I could see in my account or project that appeared to be a “service account list”. This might be due to a recent UX update, but thankfully resolving it was fairly easy.

First off make sure you are in the new project we created called “My Test NLP Project”. If you are not in the right project you will actually end up seeing the settings for a different project.

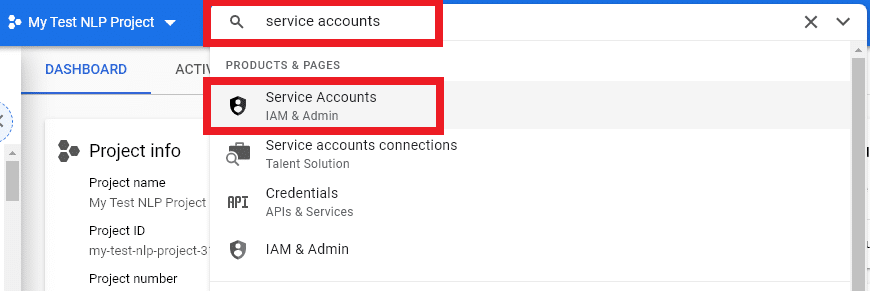

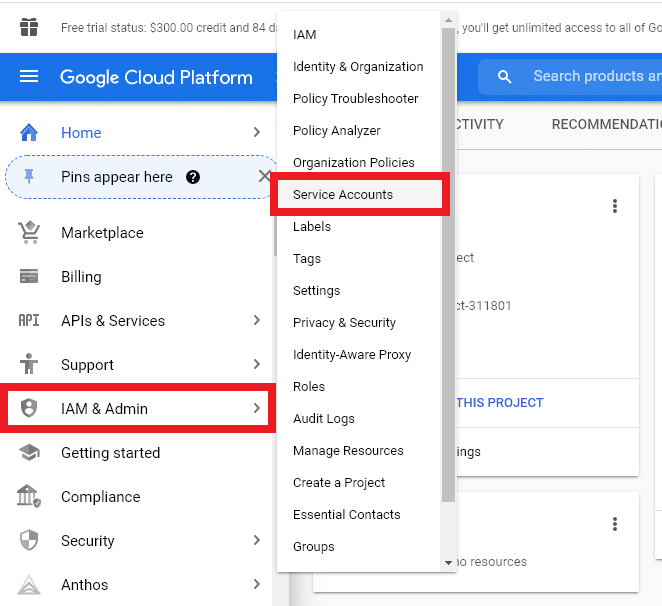

Once in the project you can find your “service account list” either by typing “service account” in the search bar at the top of the page or by selecting “IAM and Admin” on the left-hand navigation and then “service accounts”.

Here is what it looks like in the search bar

Here is how to get to this from the left-hand navigation

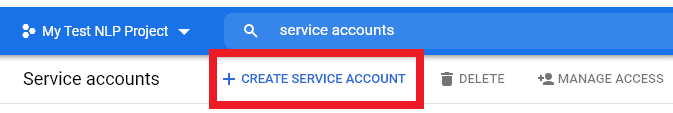

Once here you can create a new service account by clicking the “create service account” button at the top of the page just under the search bar.

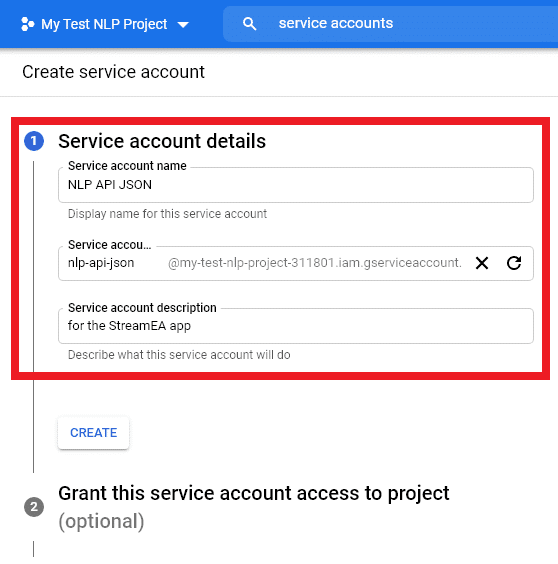

Fill in the service account details and click “create” before moving on to the two optional settings.

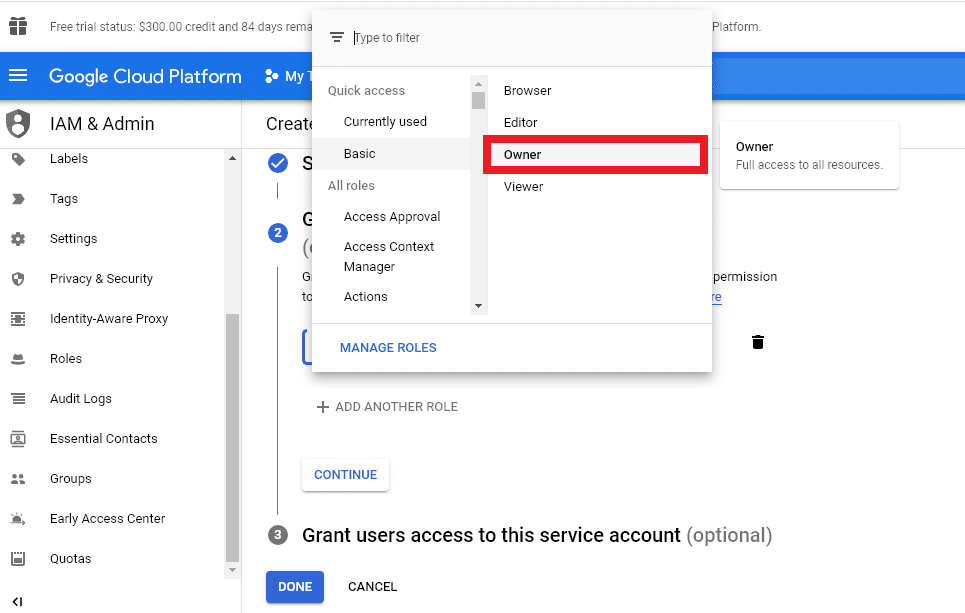

The second setting Google Cloud says is optional, but for the StreamEA app and our NLP needs this is required. If you do not set this the StreamEA app will not work correctly.

Click the downward facing arrow and select “Owner” under the basics menu as seen below.

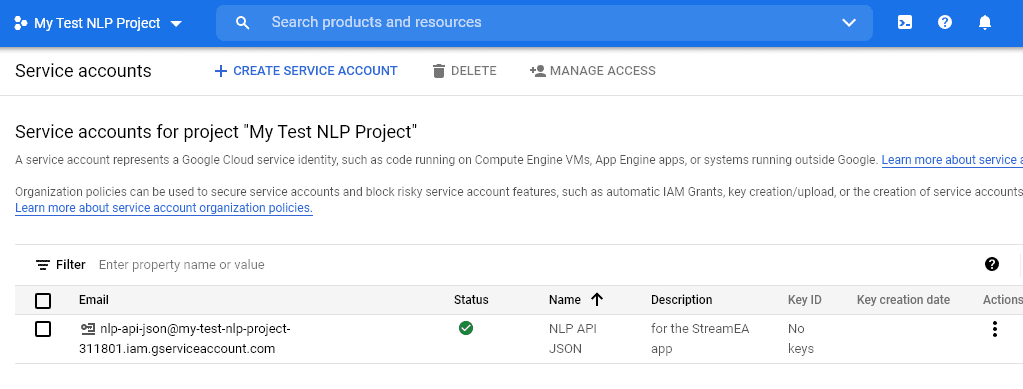

That is the last setting you need to worry about. Click the “done” button and your settings for this service account should apply and take you to a screen that looks something like this.

Step 4: Create and Download your JSON Key

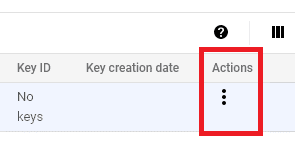

You may notice in the final screen from Step #3 that your service account says “No keys” under the heading “Key IDs”. According to the StreamEA app we need a JSON key to upload for the service to work correctly, but unfortunately the instructions are again not super clear, saying only this: “Click create, then download your JSON key”

To get started we are going to click the three dots icon on the far right side of the service account we just created as shown here.

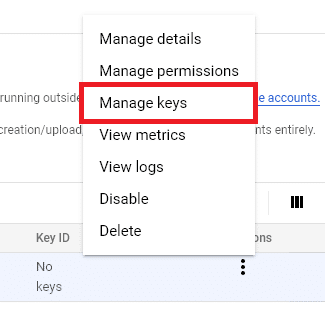

Once this menu opens we are going to select “Manage Keys”

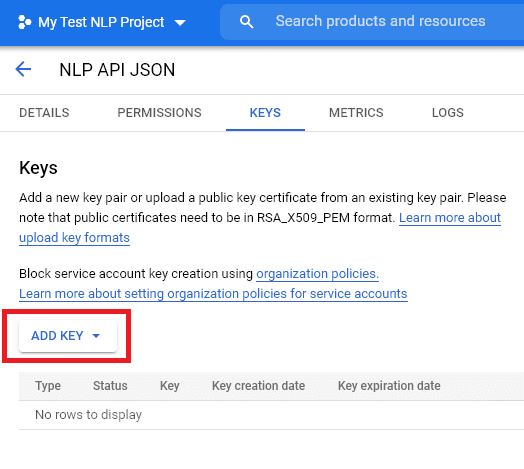

There shouldn’t be any keys listed here yet. We want to create a new key so click on the “Add Key” button.

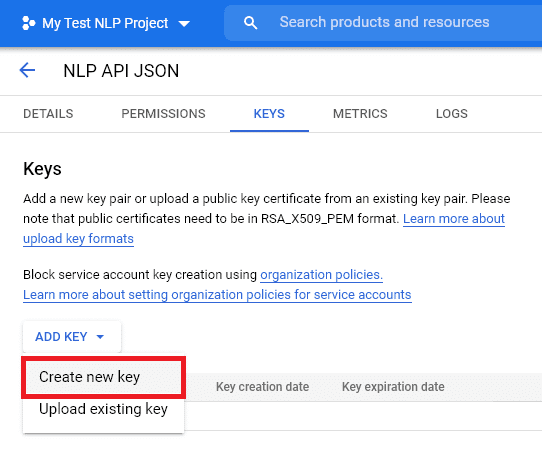

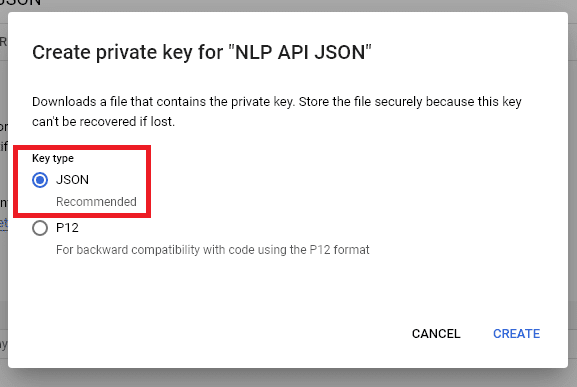

Now select “Create New Key” in the dropdown menu that appears.

This will bring up a popup option to select the type of key. Google Cloud Platform and the StreamEA app both recommend / require JSON so make sure to keep that selection.

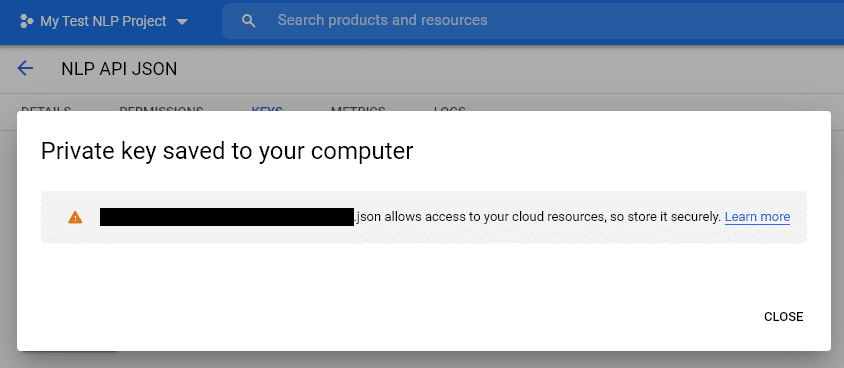

Once you click the “create” button this will generate a JSON key in the form of a .json file which automatically downloads to your computer. Google displays a dire warning as well stating that should anyone gain access to this file they will also gain access to your Google Cloud resources and could use it to charge usage to your account.

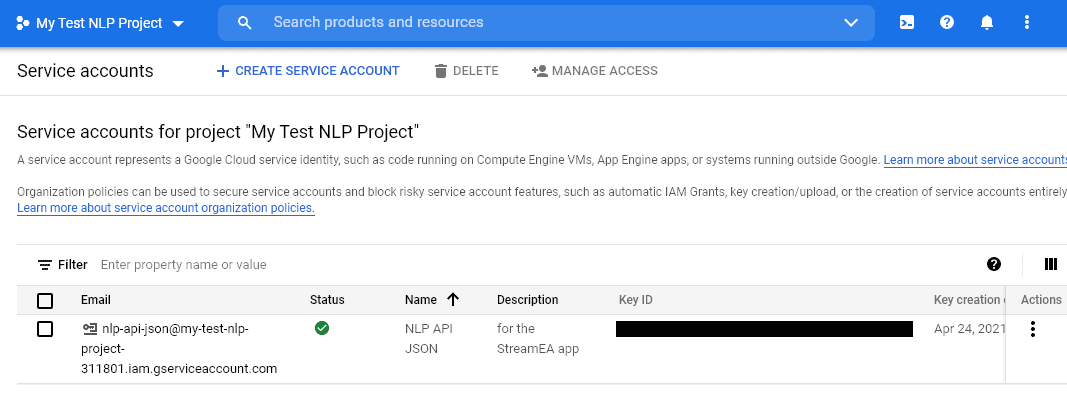

When you go back to your service account list (“service accounts”) you will see there is now a Key ID appearing for the service account we created for this project. It will look something like the below screenshot (actual Key ID redacted).

You now have your Google Cloud project set up and ready to use for the StreamEA app to leverage Google’s Natural Language Processing API to find keywords.
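Before uploading the key anywhere, it can be worth a quick sanity check that the file you downloaded really is a service account key. The snippet below is a minimal sketch of that idea, not something StreamEA or Google provides. The field names in `EXPECTED_FIELDS` are the ones I expect to find in a service account key file; treat that list as an assumption and compare it against your own download.

```python
import json

# Fields I expect in a service account key file. This list is an assumption:
# compare it with the file you actually downloaded.
EXPECTED_FIELDS = {"type", "project_id", "private_key_id", "private_key", "client_email"}


def check_key(raw_json):
    """Return a list of problems found in the key text (empty list = looks fine)."""
    try:
        key = json.loads(raw_json)
    except json.JSONDecodeError as err:
        return [f"not valid JSON: {err}"]
    if not isinstance(key, dict):
        return ["top level of the file is not a JSON object"]
    # Report every expected field that is absent from the file.
    problems = [f"missing field: {name}" for name in sorted(EXPECTED_FIELDS - key.keys())]
    if key.get("type") != "service_account":
        problems.append("type is not 'service_account'")
    return problems
```

To use it, read the downloaded file as text and pass the contents to `check_key`. An empty list back means the file at least has the shape of a service account key. It says nothing about whether the key has the right permissions.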

The SEO Portion Starts Here

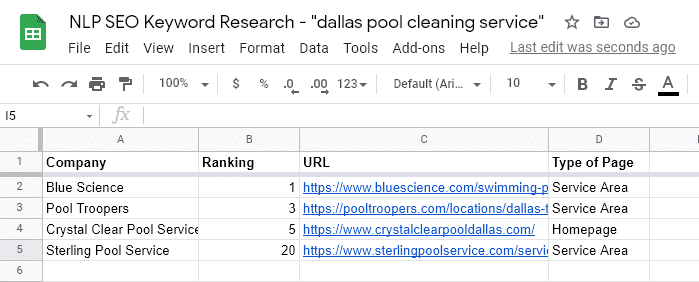

For the sake of this example we are going to look at the term “dallas pool cleaning service”. We selected a business that doesn’t appear to rank well for this term to use as the example client (they are not a client of ours): Sterling Pool Service. Sterling Pool Service currently ranks #20 for “dallas pool cleaning service” and the company Blue Science ranks #1 for the query. We are not performing any experimental actions, only examining the content of these two websites’ pages using the Google NLP API.

While we won’t be doing any work to change the example client’s ranking positions, we have still archived the SERPs as we found them prior to publishing this article. SERPs Page 1, SERPs Page 2

Step 5: Find Competitor Pages Outranking Yours

Now that we are ready to do the SEO portion of our work we are going to start off by gathering the competitor pages we want to examine and build out a simple spreadsheet either in Excel or Google Drive.

Take a keyword you are interested in ranking higher on and type it into Google. Now look for the top 3 organic search listings. We are only interested in looking at Featured Snippets and classic search listings. You should not be looking at the Paid Ads, Local Pack, People Also Ask, Video, News, Tweets, Knowledge Panel, or other things Google displays for a result. You may also want to avoid looking at aggregators and make sure the types of content you are looking at are germane to each other, meaning don’t compare a Yelp listing page to your homepage or a business’s service area page to a blog post listing the top local service providers. In this example we do include one homepage that outranks our example client.

I would recommend looking only at the top ranking page first and keeping your data sorted by current ranking position as you found them. For highly competitive search terms we might look at the top 3 results; for low competition terms we might only look at the first ranking position.

Once you have the pages build a small spreadsheet including the Name, Ranking Position, URL, and Type of Page/Document. This is an index we can refer back to later to help keep things a little organized. Our example looks like this:

Step 6: Upload Your Google Cloud JSON Key

Remember all of that work we put into getting a JSON key from Google’s Cloud Platform to use for this? It is about to pay off. Head over to the StreamEA app which you can find here: https://streamea-entity-analyzer.herokuapp.com/

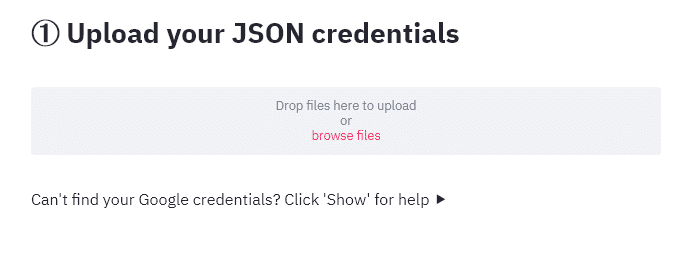

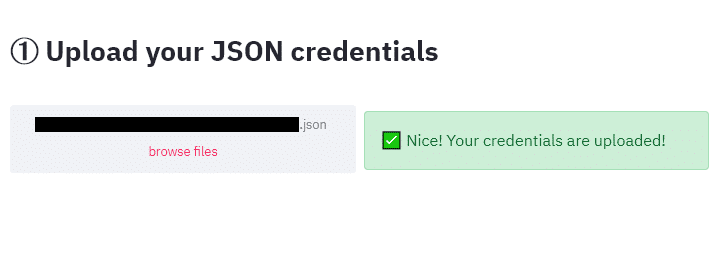

StreamEA has this as Step 1, but I have moved it to Step 6 assuming most SEOs, marketers, or business owners reading this don’t already have an active Google Cloud Platform account, a project created, and a JSON key ready to go. Scroll down just a little on the page and you’ll see a button appear to upload your credentials.

The tool says “credentials” and that may throw you a bit, but it is the same thing as your JSON key that we made earlier.

If you did this part right StreamEA will give you a success message.

As of publication StreamEA is still in beta test and the JSON key is removed after each session. That means you have to upload it each time before using this tool.

Step 7: Compare Your Page With The First Competitor Page

We finally have Google Cloud and StreamEA configured, and we have our set of webpages to examine, including the one we hope we can improve by leveraging this natural language processing API. We can now start getting some, hopefully, useful data out of it.

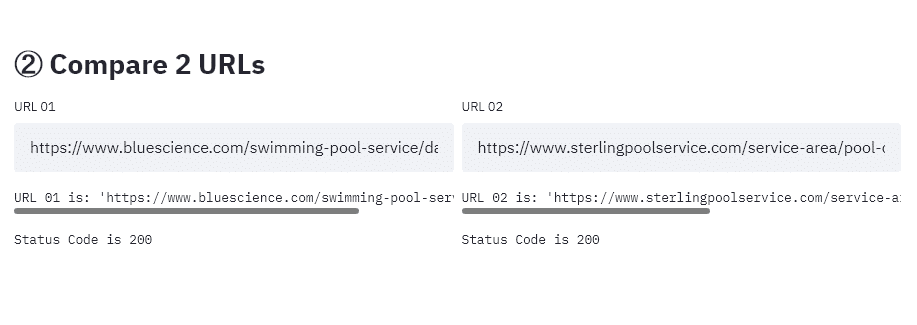

For the first scan we are going to use the #1 ranking competitor page and our example client page which is ranking down at #20. When we add those here in StreamEA’s Step 2 the tool also gives you a status code, a nice touch to quickly stop you from trying to scan a 404 page as Google will probably charge you for that too.
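If you are collecting a longer list of competitor URLs, you can run a similar status check yourself before pasting anything into the tool. This is a rough sketch using only Python's standard library; it is not how StreamEA does it (I have not seen its code). The function names are my own, and some servers reject `HEAD` requests, so treat a non-200 result as a prompt to look at the page by hand.

```python
import urllib.error
import urllib.request


def fetch_status(url, timeout=10):
    """Return the final HTTP status code for url, or None if the request fails outright."""
    try:
        request = urllib.request.Request(
            url, method="HEAD", headers={"User-Agent": "Mozilla/5.0"}
        )
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        return err.code  # 4xx/5xx responses are raised as exceptions
    except (urllib.error.URLError, OSError, ValueError):
        return None  # DNS failure, timeout, malformed URL, and so on


def scannable(urls, get_status=fetch_status):
    """Keep only the URLs that answer with a 200."""
    return [url for url in urls if get_status(url) == 200]
```

`scannable` takes the status lookup as a parameter so you can swap in your own checker, or a fake one while testing.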

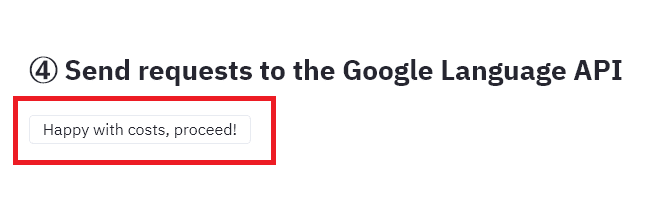

The tool offers a chance to examine the cost this scan might incur from Google, but right now everything for us is free, so skip over that step and let’s get on to seeing some data! Click the button that says “Happy with costs, Proceed!”
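If you are curious how quickly a batch of pages might eat into the free 5,000 units, here is a back-of-the-envelope estimate. It assumes one unit per 1,000 characters with a minimum of one unit per document, which is my reading of Google's pricing page; check the current pricing before relying on it. The constant and function names are mine.

```python
import math

CHARS_PER_UNIT = 1000        # assumption: one unit per 1,000 characters
FREE_UNITS_PER_MONTH = 5000  # free allowance quoted in this article


def units_for(text):
    """Estimate billable units for one document (at least one unit each)."""
    return max(1, math.ceil(len(text) / CHARS_PER_UNIT))


def units_for_scan(page_texts):
    """Total estimated units for a batch of page texts."""
    return sum(units_for(text) for text in page_texts)


def free_units_left(units_used):
    """Units remaining in the free allowance, never below zero."""
    return max(0, FREE_UNITS_PER_MONTH - units_used)
```

Under these assumptions a 2,500-character page counts as 3 units, so comparing two pages of that size would use 6 of the 5,000 free units.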

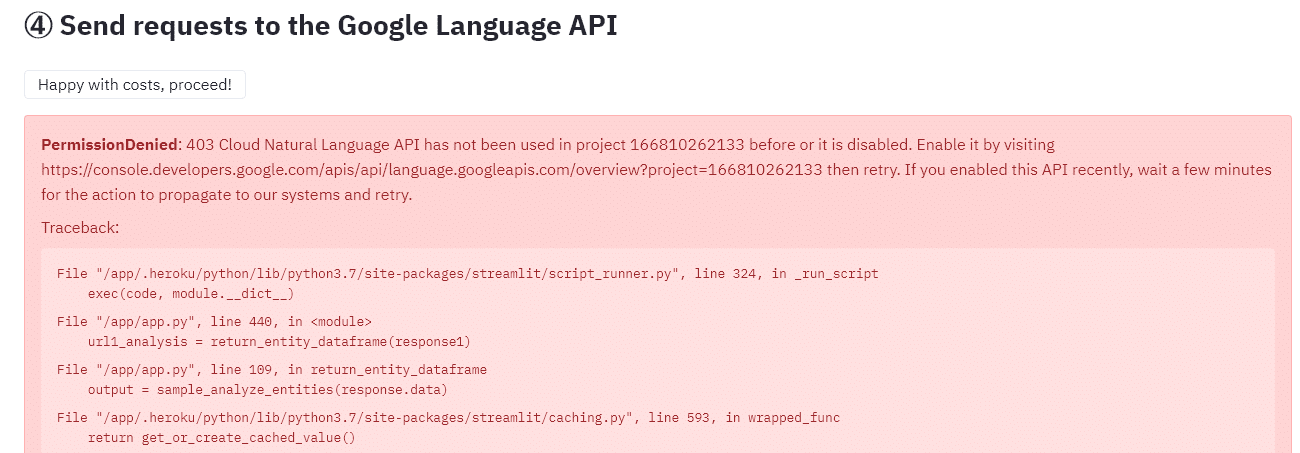

Step 8: Fix the “PermissionDenied: 403 Cloud Natural Language API has not been used in project” Error

It would seem we did not get everything set up and working in the first 4 steps on Google Cloud. This one sneaks in on you and I placed it here to ensure I was able to write about it and help you fix it.

Your error might look something like this:

And say something like this: “PermissionDenied: 403 Cloud Natural Language API has not been used in project xxxxxxxxxxxx before or it is disabled. Enable it by visiting https://console.developers.google.com/apis/api/language.googleapis.com/overview?project=xxxxxxxxxxxx then retry. If you enabled this API recently, wait a few minutes for the action to propagate to our systems and retry.”

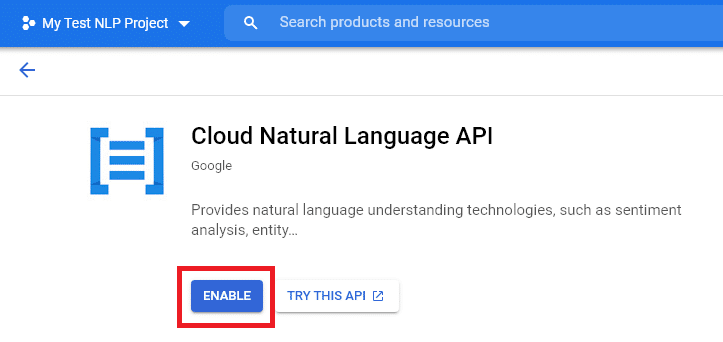

To fix this error, make sure you are logged in to the Google account that holds the Google Cloud Platform account you are using for this project. Then, in a new tab, follow the link in the PermissionDenied error readout as shown above. This link should take you to your Google Cloud Project’s APIs & Services page for the Cloud Natural Language API, where it will ask if you want to enable it or not. This looks something like the below screenshot. Click the blue “enable” button, but make sure you are in the right project first.

Once you enable the Cloud Natural Language API, go back to the StreamEA app and wait a few minutes; between 2 and 5 is typical. Google says this is to allow the changes to propagate through their cloud platform system. Get a cup of coffee and stretch and it should be ready when you get back.

Step 9: Compare Your Page With The First Competitor Page (for real this time)

I know this has been an exhausting setup, but you are really close to the payoff, get your reward!

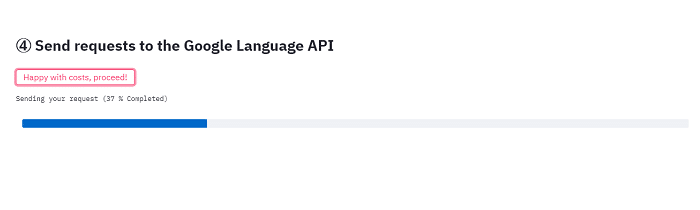

Click the “Happy with costs, Proceed!” button again and this time you should see your error message fade away and get replaced by a blue progress indicator.

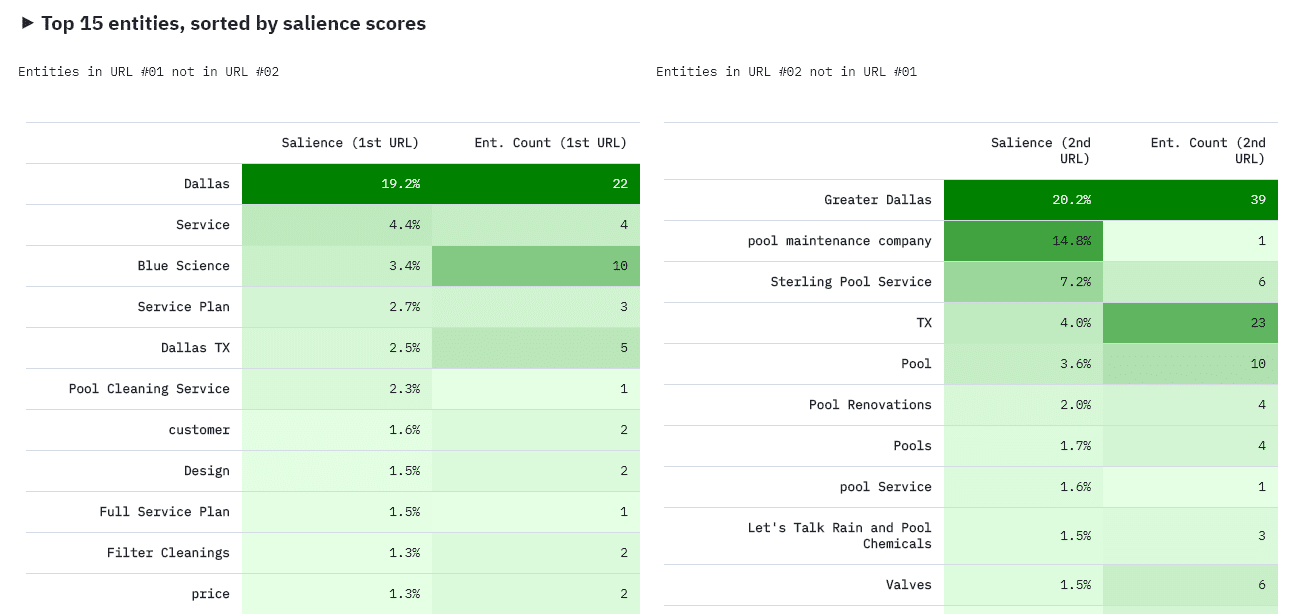

When completed the StreamEA tool spits out 3 sections of data:

- Top 15 entities, sorted by salience scores

- All Results (URL 01 + 02)

- Download CSVs

We are mostly interested in the first and third sections. The first section provides some nice, color-coded results showing what keywords are used by the top competitor (URL 1) and not our example client (URL 2). In our example comparison the example client site has the entity “Greater Dallas” appearing on their page while the top competitor has the entity of “Dallas” showing on theirs. Our example client also does not use the phrase “pool cleaning service”, which appears to have some importance for the top competitor’s content. The data is sorted here by “salience” score, i.e. what Google’s NLP thinks is most relevant to the provided text. It also provides the number of times a known entity is mentioned in each page’s content.

Here is a screenshot of our example

If you want a quick view or idea of what might be happening this can definitely help out. Our example client appears to clearly be missing the mark on some entity usage on their page that could help them rank higher.
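For the developers reading along, the comparison in this step can be roughed out in Python. This is a sketch under assumptions, not StreamEA's actual code, which I have not read. `analyze_entities` uses the `google-cloud-language` client library and the JSON key from Step 4. `missing_entities` is a hypothetical helper of mine that lists the competitor's top entities that never appear on your page.

```python
def analyze_entities(text, key_path):
    """Return {entity name: (salience, mention count)} for one page's text.

    Needs the google-cloud-language package and a service account JSON key.
    If the API returns the same name more than once, the last one wins here.
    """
    # Imported here so the helper below can be used without the package installed.
    from google.cloud import language_v1

    client = language_v1.LanguageServiceClient.from_service_account_json(key_path)
    document = language_v1.Document(
        content=text, type_=language_v1.Document.Type.PLAIN_TEXT
    )
    response = client.analyze_entities(request={"document": document})
    return {e.name: (e.salience, len(e.mentions)) for e in response.entities}


def missing_entities(competitor, client_page, top_n=15):
    """Competitor's top entities by salience that the client page never uses."""
    ranked = sorted(competitor.items(), key=lambda item: item[1][0], reverse=True)
    return [name for name, _ in ranked[:top_n] if name not in client_page]
```

The match in `missing_entities` is exact, so “Dallas” and “Greater Dallas” count as different entities, which is how they showed up in our scan. Each call to `analyze_entities` is a billable request once you are past the free allowance.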

Step 10: Pull Data and Place in Spreadsheets

We are now going to do something with all of this wonderful data we have. First however, we need to make sure we get the data into a more useful format and we want to catalog it for future reference or to help the client better understand what is happening.

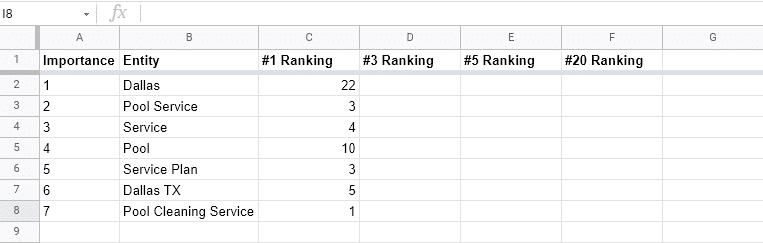

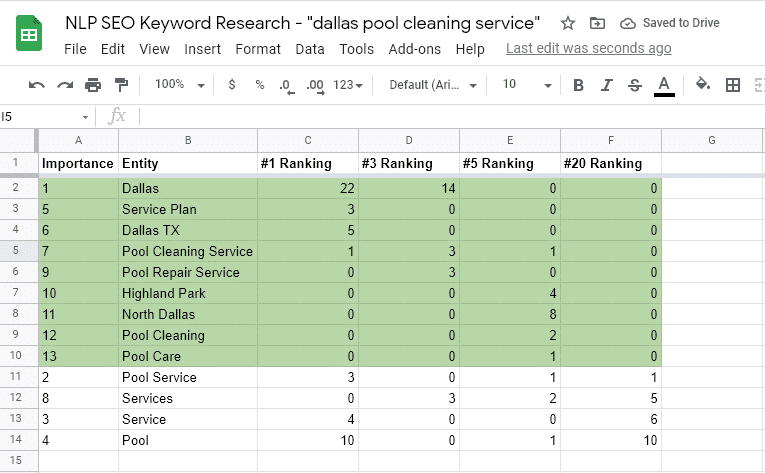

For the first part of this we are going to examine the relationship between rankings and the top keywords (by salience) not used by our example client. Create a new tab in your Google Drive or Excel spreadsheet and call it something like “Entities by Page Ranking”. In the left-hand column place all prominent entities for our top competitor in order of salience value. In our example we determined any entity with a salience over 2% for our top competitor should be examined. Now insert a column to the left of this and label it “Importance”, then number your entities from 1 up to however many you listed, from most to least important (i.e. most salience to least).

Along the top of the columns to the right place your competitors name or their position rank as column labels.

Finally we are going to record the count or number of times an entity appears in a document in the column underneath the page’s ranking value (or name) and in the row aligned to the entity. Our first column with our top competitor looks something like this.

Be sure to download the CSV files or copy all of the data off of each page before you continue on as we will need to perform a few more scans to get all of the data we need.

Here is what our Entity Count by Page Ranking looks like after all entities with a salience higher than 2% are added and their counts are placed in the sheet. With the sheet sorted so the zeros in the example client’s column come first, we can clearly see what entities we should consider adding as keywords to our page to help it rank better.

If this was my client I would make sure we added neighborhoods of Dallas to their page, improved our usage of the entity Dallas instead of Greater Dallas, and better defined the services they offer in a way that those services might be seen as entities.
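If you would rather not build the Entity Count by Page Ranking sheet by hand, the same layout can be generated with a few lines of Python and opened in Excel or Drive as a CSV. This is only a sketch of the sheet described in this step. The input shape (a dict of `{entity: (salience, count)}` per page) and the function names are my own assumptions, so you would need to load your downloaded CSVs into that shape first.

```python
import csv
import io

SALIENCE_CUTOFF = 0.02  # the 2% threshold used in this article


def count_sheet(pages, top_page):
    """Build rows: Importance, Entity, then one mention-count column per page.

    pages maps a column label (rank or name) to {entity: (salience, count)}.
    Only entities above the cutoff on top_page are listed, most salient first.
    """
    top = pages[top_page]
    entities = sorted(
        (name for name in top if top[name][0] > SALIENCE_CUTOFF),
        key=lambda name: top[name][0],
        reverse=True,
    )
    header = ["Importance", "Entity", *pages]
    rows = [
        [rank, name, *(pages[label].get(name, (0.0, 0))[1] for label in pages)]
        for rank, name in enumerate(entities, start=1)
    ]
    return [header, *rows]


def to_csv(rows):
    """Serialize the rows as CSV text that Excel or Drive can import."""
    buffer = io.StringIO()
    csv.writer(buffer, lineterminator="\n").writerows(rows)
    return buffer.getvalue()
```

A page that never mentions an entity gets a 0 in its column, which is what makes the gaps on your own page easy to sort to the top.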

Step 11: Build 2 More Types of Spreadsheets for Analysis

The Entity Count by Page Ranking helps us understand the most important entities (keywords) on a high-ranking page and how frequently they are used within that text. This might sound a lot like an old-school concept known as “keyword density”, where SEOs would use tools to examine how frequently a keyword appeared in a given text. It might also sound like the “keyword placement” or “keyword pattern” concepts used by tools such as Page Optimizer Pro and CORA to help you replicate the placement patterns of keywords in a given text. However, here we are examining usage and importance throughout an entire text without regard to density or placement position, and we are examining Entities as understood by a Natural Language system, not simply keywords. Tools like Page Optimizer Pro do claim to use the Google Cloud NLP API but only offer it to Agency-level clients at the moment, and CORA’s website does not make any mention of this usage.

We know that Google’s rankings use NLP to help determine semantically related keywords, and semantically related entities. We also know that Google and other engines may keep a corpus of some sort that defines these relationships but that is not published publicly. This makes data from the Google Cloud NLP API the closest to actually getting data Google’s search algorithms might use for ranking or filtering purposes. For the moment StreamEA is the only free tool on the market that grants this data access and provides the output in useful formats.

To provide a more advanced understanding we want to examine the data in a few more ways:

- An Entity Usage Matrix

- Entity Salience by Page Ranking

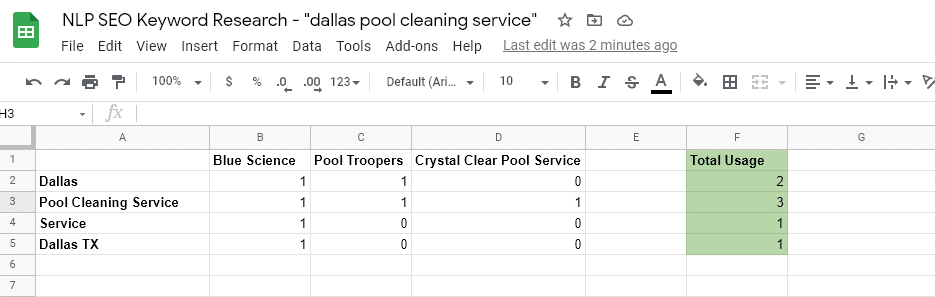

Entity Usage Matrix:

This is a simple matrix designed to show us if a document uses an important entity in its content or not. The heading of your columns here can be the brand name or page’s current ranking position and the left-hand column should be the entity or keyword being examined. Like our previous document we are not going to list every entity here (though you can) we are only going to list the top entities by salience.

Now find where an entity and page meet and enter into this cell a “1” if the document in the column label uses this entity in its content (leave a cell blank or place a “0” if unused). Our goal is to find entities used most frequently among the top ranking pages that are not used by our page. For this reason we are not including our example client here.

Create a column to the right of your matrix and use it to add up the count of times an important entity is used in high ranking competitor documents. Your final result should look something like this:

A quick note here: For our purposes we are counting all capitalization versions as the same entity even though Google’s NLP API separates them out, as does the StreamEA tool (i.e. we treat “Pool Cleaning Service” and “pool cleaning service” as the same thing). A Natural Language system may use capitalization of words in a phrase to signify a separate entity and/or the importance of an entity, or it may not. We did this for simplicity’s sake in this example. You may want to examine and track different capitalizations of an entity separately for a more granular understanding.
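The Entity Usage Matrix above, including the choice to treat all capitalizations as one entity, can be sketched like this. The function name and input shape are hypothetical: each competitor page is represented by the set of entity names the scan found on it.

```python
def usage_matrix(entities, competitor_pages):
    """One row per entity: a 1/0 flag per competitor page, then the row total.

    competitor_pages maps a column label to the set of entity names found on
    that page. Names are compared case-insensitively, as in this article.
    """
    folded = {
        label: {name.casefold() for name in names}
        for label, names in competitor_pages.items()
    }
    header = ["Entity", *competitor_pages, "Total"]
    rows = []
    for entity in entities:
        flags = [int(entity.casefold() in folded[label]) for label in competitor_pages]
        rows.append([entity, *flags, sum(flags)])
    return [header, *rows]
```

If you want to track capitalizations separately instead, drop the `casefold()` calls and the matrix will treat each casing as its own row.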

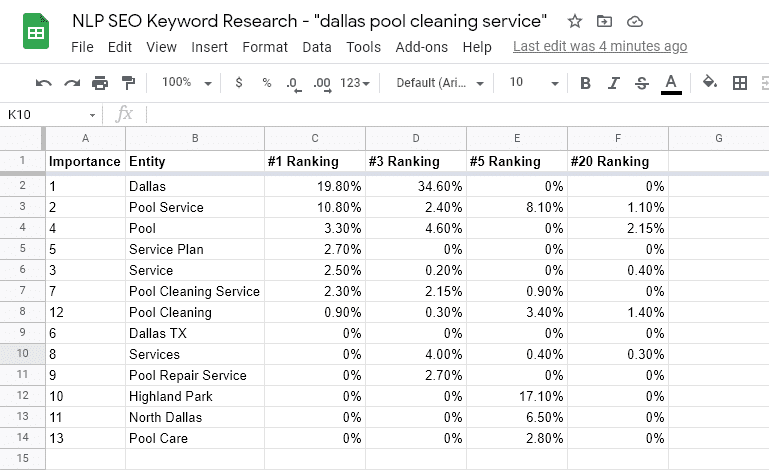

Entity Salience by Page Ranking:

Our final spreadsheet will let us quickly examine how important our most important entities are to each of the documents we are studying. This will help set benchmarks for salience as we edit our own content and examine it later on using the Google NLP API. Because we are combining various casings of entities as one, make sure to add their salience scores and then divide by the total number of entities (not entries). In our example Crystal Clear Pool Service has a 10.4% salience rating for “pool service” and a 5.8% rating for “Pool Service”; combining them and then dividing by 2 gives us an average rating of 8.1%. We divided down to find the ‘average salience’ in order to keep from exaggerating the importance of an entity in case this methodology is less representative of how an engine actually uses Natural Language Processing to understand entities on a page.
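The averaging step can be written down as a small function. This is just the arithmetic from the paragraph above, with a name and input shape I made up for illustration: a list of (entity name, salience) pairs for one page.

```python
from collections import defaultdict


def average_salience(entries):
    """Average salience across casing variants of the same entity.

    entries is a list of (entity name, salience) pairs for a single page.
    The result is keyed by the case-folded entity name.
    """
    grouped = defaultdict(list)
    for name, salience in entries:
        grouped[name.casefold()].append(salience)
    return {name: sum(values) / len(values) for name, values in grouped.items()}
```

Feeding it the Crystal Clear Pool Service figures, `[("pool service", 10.4), ("Pool Service", 5.8)]`, gives roughly 8.1 for “pool service”, matching the hand calculation.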

When you are finished you should have a sheet that looks something like this one below:

A few things to note here:

- When sorted by highest salience for the top ranking document we can clearly see the deficiencies of our example client’s content (position 20). Instead of looking at the data by count of entity we can see that our client page has a 0% rating for “Dallas” while the top 2 have over a 19% salience rating for this entity.

- Because we added all casing types of entities we were able to uncover that our client does indeed discuss things like “pool service”, “pool”, and “pool cleaning” in their content; however, their usage is not deemed very important by Google’s Natural Language system. This is especially true when compared to at least one of our top ranking competitors.

- We did not originally download our data for the top ranking competitor and our first scan showed “Dallas TX” and “Pool Repair Service” as top entities (over 2% salience). However, upon a second scan both failed to even show up for this document. Google’s NLP is constantly changing and updating and you will find oddities like this in your own data. Like many other SEO tools, it may be best to take multiple readings before taking more drastic action.
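One way to act on that last point is to keep the results of each scan and check which entities clear the 2% line every time. The sketch below assumes you have each scan of the same page as a dict of entity to salience; the function name and that input shape are my own.

```python
def stable_entities(scans, cutoff=0.02):
    """Split entities into those above the cutoff in every scan and the rest.

    scans is a list of {entity: salience} dicts, one per scan of the same page.
    Returns (steady, unsteady) as two sorted lists.
    """
    above = [
        {name for name, salience in scan.items() if salience > cutoff}
        for scan in scans
    ]
    seen = set().union(*above)
    steady = set.intersection(*above) if above else set()
    return sorted(steady), sorted(seen - steady)
```

Entities in the second list are the ones to treat with caution before making big content changes.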

Step 12: Practical Applications of NLP Data

Just because a fancy NLP system says something doesn’t make it 100% true. Our final task will be to examine our example client’s content and look for ways to apply what we have learned to improve the content.

To avoid interfering with this market we are not linking to any of the examined pages or posting any of their content here. You can view full screen capture images by clicking the following links: Blue Science (#1 ranking), Pool Troopers (#3 ranking), Crystal Clear Pool Service (#5 ranking), Sterling Pool Service (#20 ranking – example client).

The example client’s content breaks down like this:

- Heading (h1)

- Service Description (3 paragraphs)

- Sub-heading 1 (h3)

- Standard Contact CTA

- Sub-heading 2 (h2)

- General paragraph about the target area

- Recent Reviews from area customers

- Recent Testimonials from area customers

- Log of recently completed jobs from area

- List of recent blog posts

The page layout is not awful and shows some effort has been put into it; possibly it even ranked well for this query years ago. However, there are glaring omissions that we can see plainly now when comparing the data from our NLP scans to the content that appears on the page.

In the first portion of the content the term “Dallas” is never used on its own, it is always written as “Dallas, TX” or “Greater Dallas, TX”. This might not be impacting search results, but it feels incredibly formal for something that should feel more local. The page breadcrumb also spells the entity in a lowercase “dallas tx”.

The service description paragraphs list off services offered by the example client, but offer few details about those services or why a consumer should pick this company. As the NLP data shows, there are also key services missing here such as “pool cleaning service”.

Our example client is missing what could be a critical element on their page: a price list or service plan description. We see this arise in the salience data for our top competitor page, where “service plan” has a 2.70% salience rating. However, the pricing information actually appears on the two topmost competitor pages. While we could have seen this with the naked eye, our NLP data shows us that by providing service pricing and by defining the service pricing with an entity name we might be able to improve our rankings.

Finally, a quick look at the top competitor reveals another crucial element of the page that might be missing, neighborhoods in the city of Dallas serviced by the company. We saw this sort of arise in our NLP data but a quick review of each page shows that adding this may help the example client rank higher for our more generic search, especially if they cover neighborhood entities associated with the city by an engine.

Frequently Asked Questions

Question: Why Use the Google Cloud Natural Language API?

We know that Google’s rankings use NLP to help determine semantically related keywords, semantically related entities, and to identify entities a consumer might consider important. We also know that Google and other engines may keep a corpus of some sort that defines these relationships but that is not published publicly. This makes data from the Google Cloud NLP API the closest to actually getting data Google’s search algorithms might use for ranking or filtering purposes. For the moment StreamEA is the only free tool on the market that grants this data access and provides the output in useful formats.

Question: Could I use Another NLP System?

You could certainly use another system if you want, but the tool discussed here only uses the Google Cloud NLP API. You can find more NLP Libraries in this article from Towards Data Science.

Question: How much does the StreamEA tool cost?

This tool is currently free, but I anticipate it will be a paid tool soon. You may have to pay Google if you use more than 5,000 units per month.

Question: Why doesn’t this tool tell me exactly how to use an entity or where in my content to put it?

As discussed earlier, keyword placement / pattern is the domain of tools like Page Optimizer Pro and CORA. This tool only shows you what entities are likely not being included and how important those entities are to your competitors’ documents which are outranking yours.

Question: Will this tool track my entities or salience over time?

For now that answer is “No” and since I am not the developer I cannot answer this for you. Charly’s Twitter is at the top of this article and his website is listed below.

Question: Could I use this data for link building?

Answer, probably. I am experimenting with this usage now and will write a guide in the future.

Acknowledgements

We were already using the Google Cloud NLP API to extract useful data when the StreamEA tool came along. It has a lot of promise and I hope Charly continues to improve it. You should check out his website CharlyWargnier.com and hire him maybe? He also appears to be working on other tools for SEO.

Ben Garry, a UK SEO at Impression agency, wrote an incredibly helpful piece on NLP, Entities, and Salience back in 2019. I reference it often and used it as a guide to help me in leveraging the StreamEA app’s data output. Read the article “What is entity salience and why should SEOs care?”

Streamlit: this appears to be the application builder used by Charly for the StreamEA tool. Worth a look for the developers out there exploring ways to use Python for SEO or other needs.